Credit Card Fraud Detection

Curious about the technology behind fraud prevention? Uncover the machine learning algorithms that can help protect your financial data.

Summary

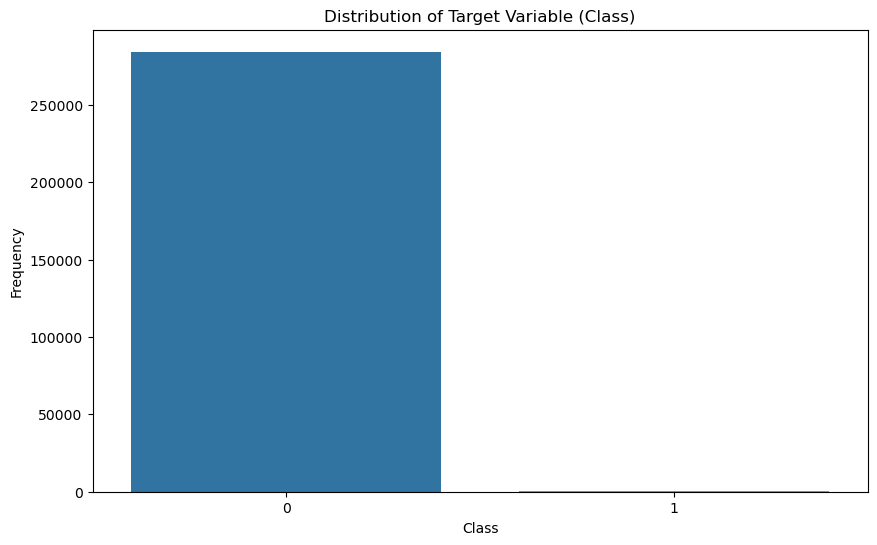

In this project, we developed a fraud detection model to identify fraudulent transactions within a highly imbalanced dataset. The primary goal was to make financial transactions more secure and reliable by accurately distinguishing genuine from fraudulent activity. This summary covers the project's key features, methodology, and practical implications. The full notebook and analysis, with larger visualizations, are available here.

Key Features

- Data Preprocessing:

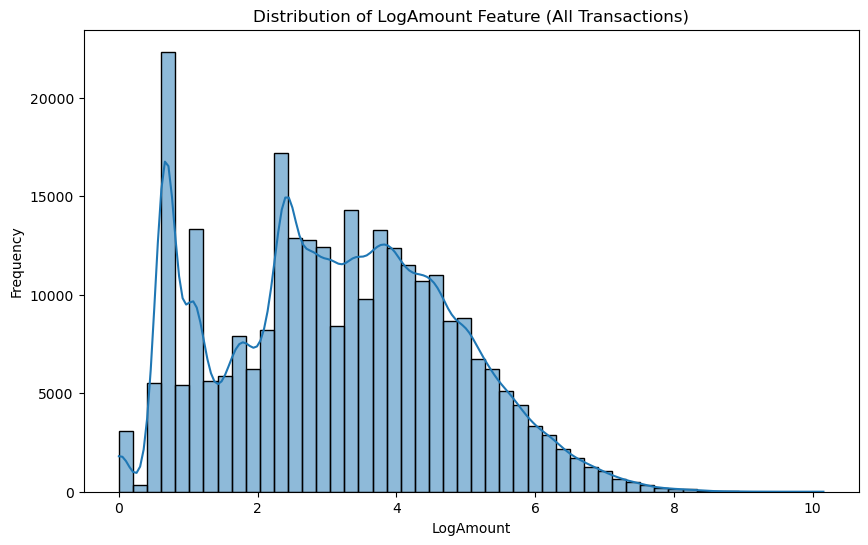

- Applied log transformation to the `Amount` feature to reduce skewness.

- Standardized features using `RobustScaler` for consistent scaling.

- Addressed class imbalance with SMOTE (Synthetic Minority Over-sampling Technique).

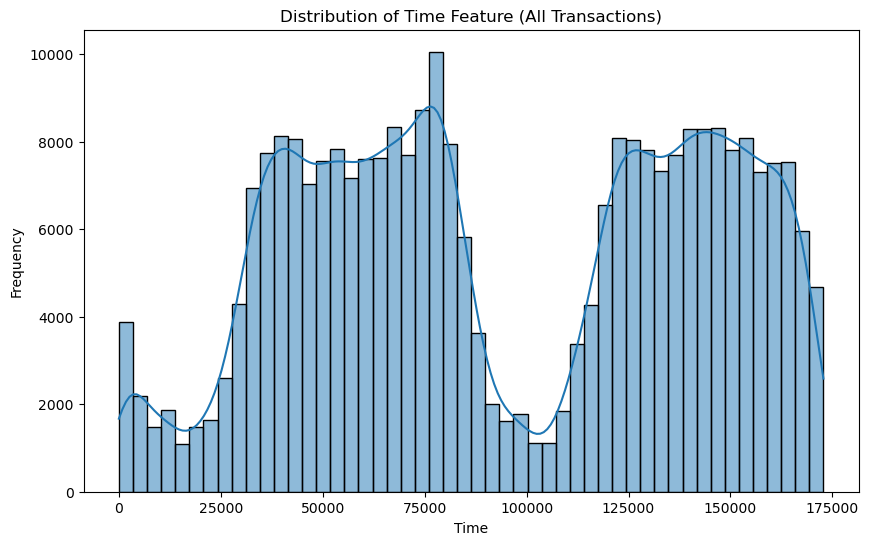

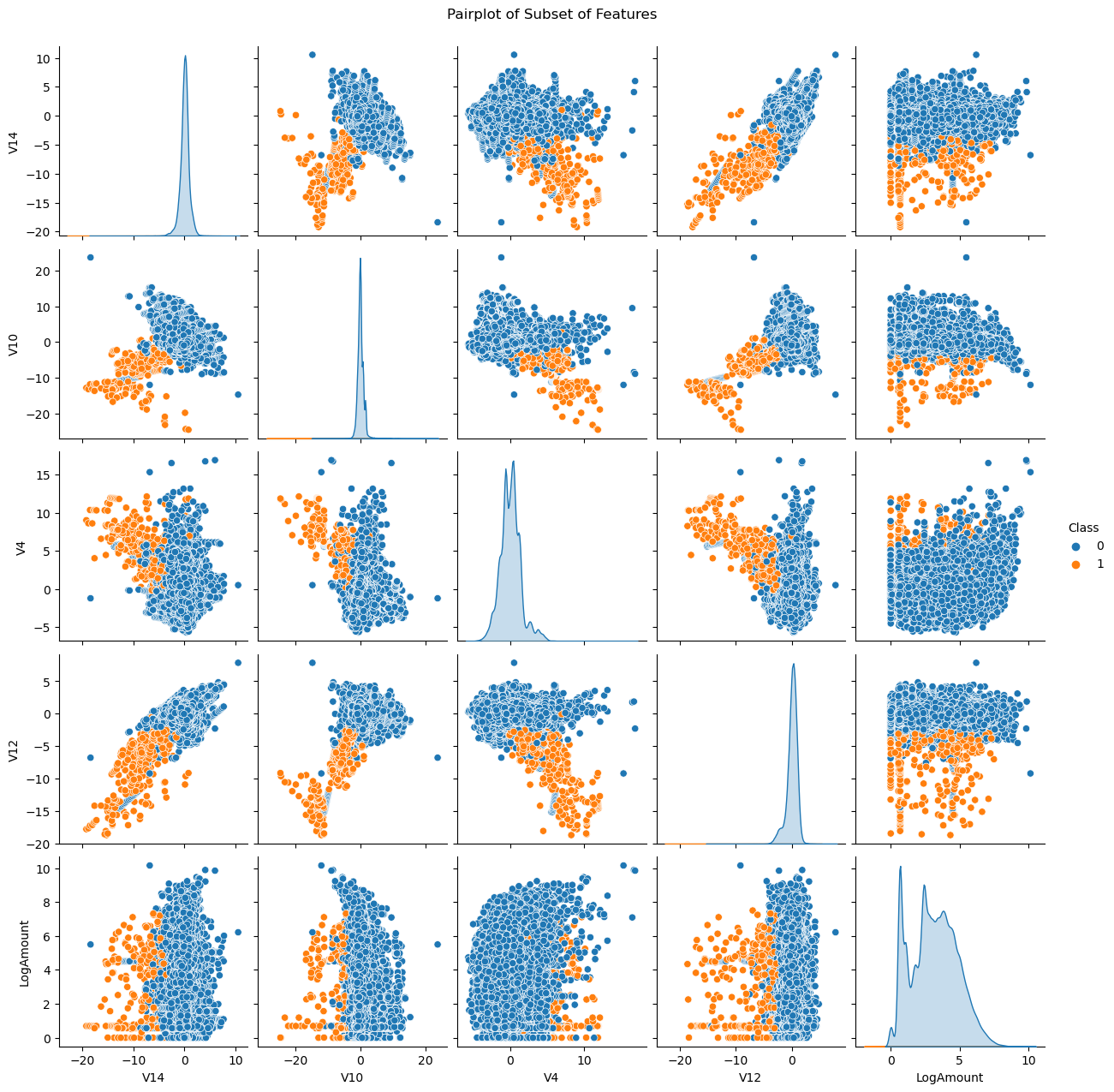
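The three preprocessing steps can be illustrated with a small standard-library sketch. It is not the project's code: it assumes the log transform was `log1p` (so zero amounts stay finite), re-implements the default behaviour of `RobustScaler` (center on the median, divide by the interquartile range), and shows only the interpolation step at the heart of SMOTE, not the nearest-neighbour search. All data values and function names below are made up for illustration.

```python
import math
import random
import statistics


def log_transform(amounts):
    """Compress a right-skewed feature with log(1 + x) (assumed variant)."""
    return [math.log1p(a) for a in amounts]


def robust_scale(values):
    """Center on the median and divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]


def smote_like_sample(point, neighbor, rng):
    """Create one synthetic row on the segment between two minority rows."""
    gap = rng.random()  # uniform in [0, 1)
    return [p + gap * (n - p) for p, n in zip(point, neighbor)]


# Hypothetical transaction amounts with one large outlier.
amounts = [0.0, 1.5, 9.99, 25.0, 120.0, 2500.0]
scaled = robust_scale(log_transform(amounts))

# Two hypothetical fraud rows (already scaled); SMOTE would pick the
# neighbor from the k nearest minority samples instead of taking it as given.
rng = random.Random(0)
fraud_a, fraud_b = [0.2, 1.0], [0.6, 3.0]
synthetic = smote_like_sample(fraud_a, fraud_b, rng)
```

After scaling, the feature has a median of 0 and an interquartile range of 1, and the outlier no longer dominates the scale because neither statistic depends on the extreme values.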

- Exploratory Data Analysis (EDA):

- Used visualizations like histograms, pairplots, and heatmaps to understand feature distributions and relationships.

- Identified key patterns differentiating fraudulent from non-fraudulent transactions.

- Model Selection and Hyperparameter Tuning:

- Evaluated basic Logistic Regression, Random Forest, Gradient Boosting, and XGBoost models.

- Performed hyperparameter tuning with GridSearchCV on Random Forest and XGBoost models, optimizing for average precision.
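A minimal sketch of tuning with `GridSearchCV` while optimizing for average precision is shown here. It assumes scikit-learn is installed, uses a small synthetic dataset as a stand-in for the real transactions, and searches a hypothetical grid; the project's actual search space and data are not given in this summary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic stand-in data: roughly 5% positives (an assumed ratio).
X, y = make_classification(
    n_samples=600, n_features=8, weights=[0.95, 0.05], random_state=0
)

# Hypothetical grid, kept tiny so the example runs quickly.
param_grid = {"n_estimators": [25, 50], "max_depth": [3, None]}

search = GridSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_grid=param_grid,
    scoring="average_precision",  # rank candidates by average precision
    cv=StratifiedKFold(n_splits=3, shuffle=True, random_state=0),
)
search.fit(X, y)

best_params = search.best_params_  # winning combination from the grid
best_score = search.best_score_    # mean cross-validated average precision
```

Stratified folds keep the rare positive class present in every split, which matters when the metric is computed on only a handful of fraud cases per fold.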

- Model Performance Evaluation:

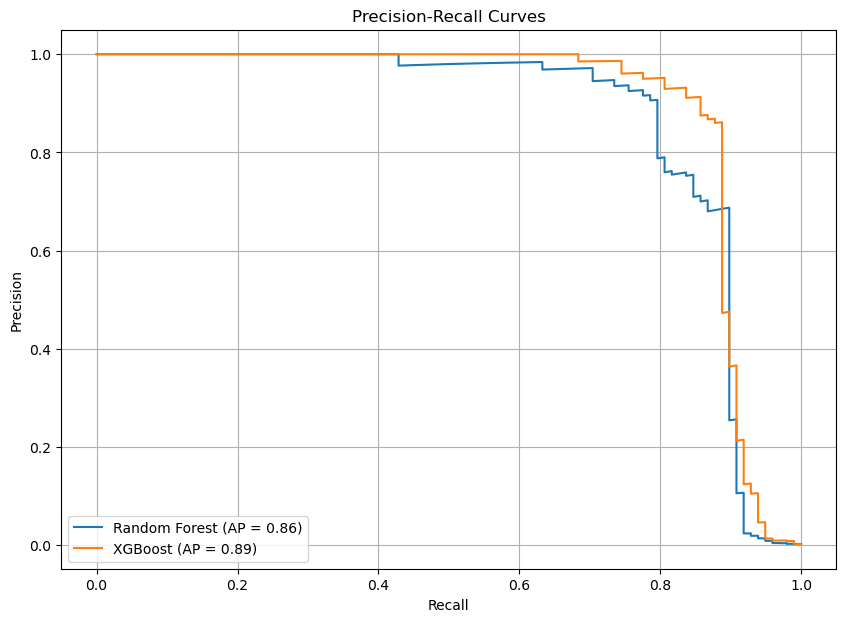

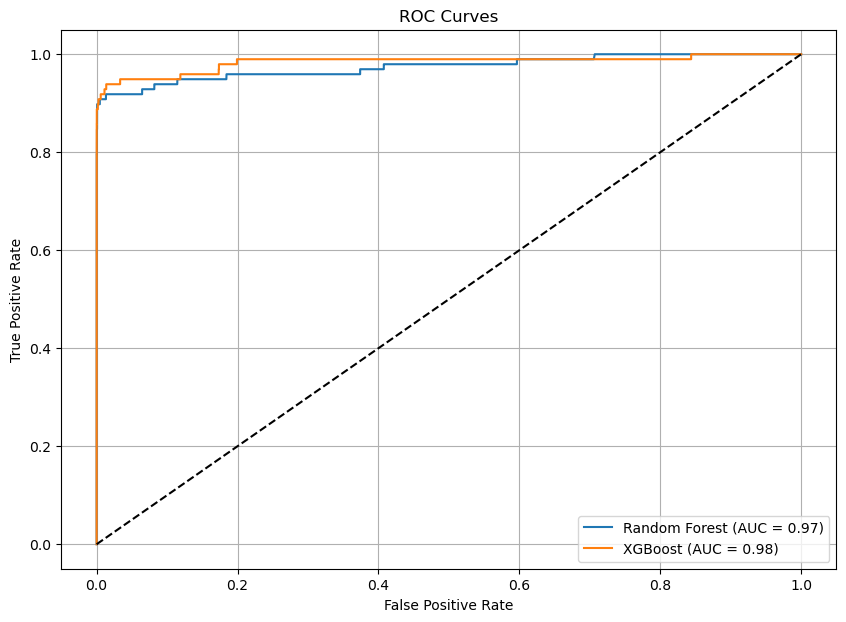

- Compared models using metrics such as Precision-Recall AUC, ROC AUC, and F1-score.

- Selected XGBoost as the final model for its balance of precision and recall: it reached a Precision-Recall AUC of 0.89 and the best F1-score (0.83) among the tuned models.
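To make the ranking metric concrete, the sketch below computes average precision from scratch. It is an illustration rather than the project's evaluation code (which presumably relied on library metric functions), and it assumes all predicted scores are distinct, since tied scores need extra handling. The function name and the toy labels and scores are made up.

```python
def average_precision(y_true, scores):
    """Sum precision at each true positive, weighted by the recall step.

    Assumes binary labels (0/1) and distinct scores.
    """
    # Rank transactions from most to least suspicious.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    total_positives = sum(y_true)
    true_positives = 0
    ap = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            true_positives += 1
            precision_here = true_positives / rank
            ap += precision_here / total_positives  # recall rises by 1/total
    return ap


# Toy example: two fraud cases, one ranked first and one ranked third.
toy_ap = average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

For the toy example the two hits have precision 1/1 and 2/3, so the result is (1 + 2/3) / 2, about 0.83. Unlike accuracy, this metric is not inflated by the large number of easy negatives in an imbalanced dataset.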

Practical Implications

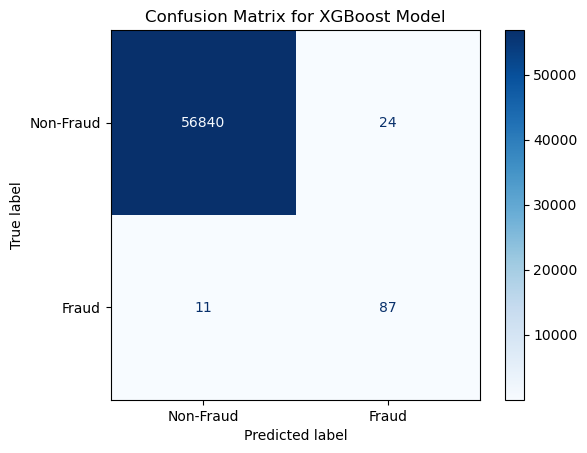

- High Accuracy and Reliability: The tuned XGBoost classifier catches most fraudulent transactions (recall 0.88) while keeping false alarms moderate (precision 0.78), so few genuine transactions are interrupted.

- Customer Confidence: Provides a reliable system, minimizing interruptions to legitimate transactions.

- Continuous Improvement: Emphasizes ongoing monitoring and enhancement to adapt to new fraud patterns.

Visual Highlights

Tuned Model Performance

Tuned Model Results

| Model | Training Time (s) | ROC AUC | Average Precision | PRC AUC | Precision | Recall | F1-score |
|---|---|---|---|---|---|---|---|
| Random Forest | 488.24 | 0.97 | 0.86 | 0.86 | 0.73 | 0.85 | 0.79 |
| XGBoost | 3.72 | 0.98 | 0.89 | 0.89 | 0.78 | 0.88 | 0.83 |

Final Model: XGBoost

XGBoost was chosen as the final model because it led the tuned Random Forest on every evaluation metric. Its Precision-Recall AUC of 0.89 (versus 0.86) indicates a strong ability to rank fraudulent transactions above genuine ones. Its F1-score of 0.83 (versus 0.79) reflects both higher recall (0.88), meaning more fraud is caught, and higher precision (0.78), meaning fewer false positives. It also trained far faster, in 3.72 s against 488.24 s for Random Forest, which makes it practical for real-world applications.
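As a quick consistency check, the F1-scores in the results table can be recomputed from the reported precision and recall, since F1 is their harmonic mean. The helper name below is illustrative; the input numbers are taken from the table.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs as reported for the tuned models.
reported = {"Random Forest": (0.73, 0.85), "XGBoost": (0.78, 0.88)}

f1 = {name: round(f1_from(p, r), 2) for name, (p, r) in reported.items()}
print(f1)  # {'Random Forest': 0.79, 'XGBoost': 0.83}
```

Both values match the F1-score column, so the table is internally consistent.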

Future Work

- Model Enhancement: Tune the model further and engineer additional features to improve performance.

- Ensemble Methods: Exploring combinations of multiple models for better results.